In Autumn 2017, I had the privilege of giving a series of lectures on deep reinforcement learning (deep RL) at NTNU, Trondheim. The series was open to the public but was mostly attended by students studying AI in some form at NTNU. Covering the basics of RL, recent advances, and the challenges the RL research community is grappling with, all in just three lectures, was an exciting prospect.

It took quite some preparation, and was somewhat exhausting, but hey, I can safely say that I managed to design and deliver a bootcamp of sorts on deep RL. But that is not the point. The point is to inspire curiosity amongst students, and to hope that some will join in the effort to advance RL. It was immensely rewarding.

Below is what we covered.

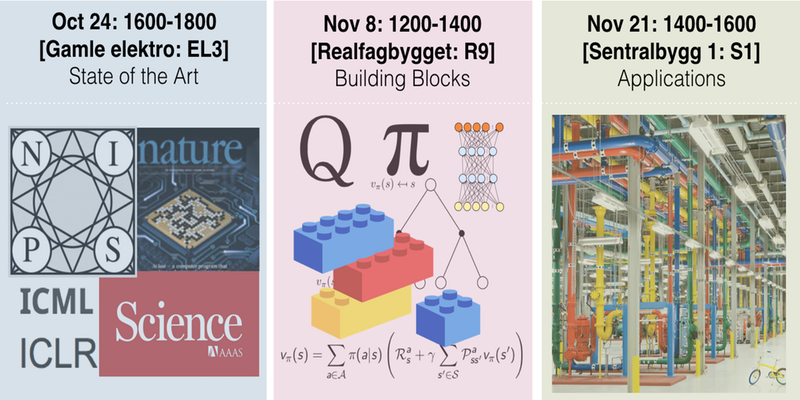

Lecture 1: Introduction and state of the art

Oct 24, 2017, 16:00-18:00 at Gamle elektro: EL3

The first lecture introduced deep RL and attempted to draw a comprehensive picture of the field as it stood in Autumn 2017. Among the topics covered were the progress that led up to the current frontiers, open technical challenges, and attempts at addressing them.

Slides here. Video forthcoming.

Lecture 2: Building blocks

Nov 8, 2017, 12:00-14:00 at Realfagbygget: R9

To make working in the field tangible for everyone, the second lecture elaborated on the building blocks of deep RL. We examined the theory and intuition behind the different classes of RL algorithms, and the use of deep models within the RL framework to scale these algorithms. Throughout, we presented the algorithms in terms of these building blocks, to encourage the creative pursuit of composing novel agent architectures and training regimens for solving sequential decision-making problems.

Slides here. Video forthcoming.

Lecture 3: Applications

Nov 21, 2017, 14:00-16:00 at Sentralbygg 1: S1

We framed various real-world control problems as sequential decision-making problems, and examined how deep RL provides a fitting solution to them. We also went through some code covering value-based and policy-based methods. The code can be found here. We also provided some exercises that encourage extending this code with a number of ideas from the previous lecture that make the algorithms more efficient.

Slides here. We did not record this session.

Ongoing rapid advancements in the field can be overwhelming for students just stepping into deep RL. Bringing some order to it, so that they can navigate the field better and go on to appreciate and take on some of the outstanding challenges, is something I find very exciting, because in this audience lie the breakthroughs the field needs. Thanks are due to both Telenor Research and the Telenor-NTNU AI Lab for supporting me in this endeavour.